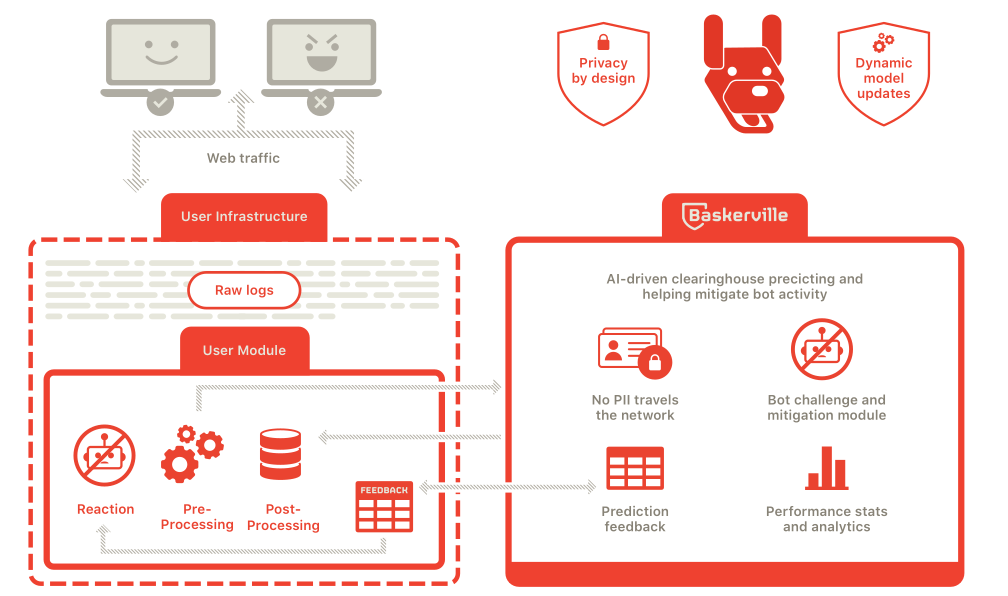

Baskerville is a machine operating on the Deflect network that protects sites from hounding, malicious bots. It’s also an open source project that, in time, will be able to reduce bad behaviour on your networks. Baskerville responds to web traffic by analyzing requests in real time and challenging those acting suspiciously.

Baskerville performance

Baskerville is a network traffic anomaly detector, used to identify and challenge malicious IP behaviour.

Challenged (past 24 hours): 53,315

Precision (past 24 hours): 99%

Passed Challenge (past 24 hours): 65

Conventional machine learning approaches for network attack detection are based around recognizing patterns of behaviour, building and training a classification model. This requires large labelled data sets. However, the rapid pace and unpredictability of cyber-attacks make this labelling impossible in real time as well as incredibly time consuming post-incident. In addition, a signature-based approach is naturally biased towards previous incidents and can be out-manoeuvred by new, previously unseen, patterns. Baskerville is built on an unsupervised anomaly detection algorithm, Isolation Forest, which does not require a labelled dataset for training. We improve on the original algorithm in order to support not only numerical but also string features from the exhibited behaviour itself.
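To illustrate why no labels are needed, here is a minimal pure-Python sketch of the standard Isolation Forest (Liu, Ting and Zhou). Each tree repeatedly splits a random feature at a random value; points that get isolated after only a few splits receive a score close to 1. This sketch handles numerical features only and does not reproduce Baskerville's extension for string features. The feature pair used in the usage example (requests per minute, distinct paths) and the class and function names are hypothetical.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c(n):
    """Average path length of an unsuccessful BST search over n points,
    used to normalise isolation depths (c(n) in the Isolation Forest paper)."""
    if n > 2:
        return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n
    if n == 2:
        return 1.0
    return 0.0


def build_tree(points, depth, max_depth, rng):
    """Split a random feature at a random value until points are isolated
    or the height limit is reached."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    # Only features that still vary within this node can be split.
    dims = [d for d in range(len(points[0]))
            if min(p[d] for p in points) < max(p[d] for p in points)]
    if not dims:
        return {"size": len(points)}
    dim = rng.choice(dims)
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([p for p in points if p[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(x, node, depth=0):
    """Depth at which x reaches a leaf, plus c(size) for unresolved leaves."""
    if "size" in node:
        return depth + c(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(x, child, depth + 1)


class IsolationForest:
    def __init__(self, n_trees=100, sample_size=256, seed=0):
        self.n_trees = n_trees
        self.sample_size = sample_size
        self.seed = seed

    def fit(self, data):
        """Train on unlabelled data (needs at least 2 points)."""
        rng = random.Random(self.seed)
        self.psi = min(self.sample_size, len(data))
        max_depth = math.ceil(math.log2(self.psi))
        self.trees = [build_tree(rng.sample(data, self.psi), 0, max_depth, rng)
                      for _ in range(self.n_trees)]
        return self

    def score(self, x):
        """Anomaly score in (0, 1]: close to 1 means easy to isolate."""
        mean_depth = sum(path_length(x, t) for t in self.trees) / len(self.trees)
        return 2.0 ** (-mean_depth / c(self.psi))


# Usage: hypothetical features (requests per minute, distinct paths) per request set.
rng = random.Random(42)
normal_traffic = [(rng.gauss(10, 1), rng.gauss(5, 1)) for _ in range(200)]

forest = IsolationForest(seed=1).fit(normal_traffic)
normal_score = forest.score((10.0, 5.0))    # typical request set
outlier_score = forest.score((500.0, 1.0))  # flood of requests to few paths
print(f"normal={normal_score:.2f} outlier={outlier_score:.2f}")
```

Only unlabelled "normal" traffic is passed to `fit`; the outlier is never seen during training, yet it scores higher because it sits in a sparse corner of the feature space and is isolated after few splits.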

We’ve trained Baskerville to recognize what legitimate traffic on our network looks like, and how to distinguish it from malicious requests attempting to disrupt our clients’ websites. Baskerville has turned out to be very handy for mitigating DDoS attacks and for correctly classifying other types of malicious behaviour. A few months ago, Baskerville passed an important milestone: making its own decisions on traffic deemed anomalous. The quality of these decisions, as measured by recall, is high, and Baskerville has already successfully mitigated many sophisticated real-life attacks.
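For reference, precision and recall, the two quality measures mentioned on this page, can be written as follows. Here a challenged request set is assumed to count as a positive prediction: $TP$ is a malicious request set that was challenged, $FP$ a benign one that was challenged, and $FN$ a malicious one that was not challenged.

$$
\text{precision} = \frac{TP}{TP + FP}, \qquad \text{recall} = \frac{TP}{TP + FN}
$$

High precision means few legitimate visitors are challenged; high recall means few malicious request sets slip through unchallenged.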

Baskerville is also a complete pipeline that takes incoming web logs as input, either from a Kafka topic, from a locally saved raw log file, or from log files saved to an Elasticsearch instance. It processes these logs in batches, forming request sets by grouping them by requested host and requesting IP. It then extracts features for these request sets and predicts whether they are malicious or benign using a model that was trained offline on previously observed and labelled data. Baskerville saves all the data and results to a Postgres database and publishes metrics (e.g. number of logs processed, percentage predicted malicious, percentage predicted benign, processing speed) for consumption by monitoring and visualization tools such as Prometheus and Grafana.
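The batch step described above (group by host and IP, extract features, predict, compute metrics) can be sketched as follows. This is a minimal illustration, not Baskerville's code: the `LogLine` fields, the three features and the `predict_malicious` threshold rule are all hypothetical, and the rule merely stands in for the offline-trained model. Ingestion from Kafka or Elasticsearch, storage in Postgres and metric export are omitted.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LogLine:
    # Hypothetical subset of fields from a parsed web log entry.
    host: str
    client_ip: str
    path: str
    status: int


def build_request_sets(batch):
    """Group one batch of log lines by (requested host, requesting IP)."""
    request_sets = defaultdict(list)
    for line in batch:
        request_sets[(line.host, line.client_ip)].append(line)
    return request_sets


def extract_features(lines):
    """Hypothetical features computed for a single request set."""
    n = len(lines)
    return {
        "request_count": n,
        "unique_path_ratio": len({line.path for line in lines}) / n,
        "error_ratio": sum(1 for line in lines if line.status >= 400) / n,
    }


def predict_malicious(features):
    """Placeholder threshold rule standing in for the offline-trained model."""
    return features["request_count"] > 100 and features["unique_path_ratio"] < 0.1


def process_batch(batch):
    """Predict per request set and compute batch-level metrics."""
    predictions = {}
    for key, lines in build_request_sets(batch).items():
        predictions[key] = predict_malicious(extract_features(lines))
    total = len(predictions)
    malicious = sum(predictions.values())
    metrics = {
        "logs_processed": len(batch),
        "request_sets": total,
        "pct_malicious": 100.0 * malicious / total if total else 0.0,
        "pct_benign": 100.0 * (total - malicious) / total if total else 0.0,
    }
    return predictions, metrics


# Usage: one IP hammering a single URL, one IP browsing normally.
batch = [LogLine("example.org", "203.0.113.7", "/", 200) for _ in range(150)]
batch += [LogLine("example.org", "198.51.100.2", p, 200) for p in ("/", "/about", "/blog")]
predictions, metrics = process_batch(batch)
print(predictions)
print(metrics)
```

Keying request sets on the `(host, client_ip)` pair means the same IP is judged separately on each site it visits, which matches the grouping the pipeline description gives.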