Whether you are the owner of an independent media site telling the stories no one else will, a non-profit or community organization informing its members of available resources and events, or a company of any size, ensuring that your website stays protected and online is of the utmost importance.

Understanding the difference between indirect and direct vulnerability

Many indirect cybersecurity attacks – malware, phishing, trojans, data breaches, and ransomware – can be prevented by raising awareness in an organization and cultivating best practices around clicking on suspect links or downloading files from unreliable sources.

However, your website can also be subjected to direct DDoS attacks. This is why its security should be managed by a dedicated technical support team that you trust, one that matches your values of transparency, privacy, and social responsibility.

What is a DDoS attack?

Unlike attacks that rely on individuals clicking on suspicious links in their email or downloading files from untrusted sites, DDoS attacks are direct assaults on the IT infrastructure of an organization.

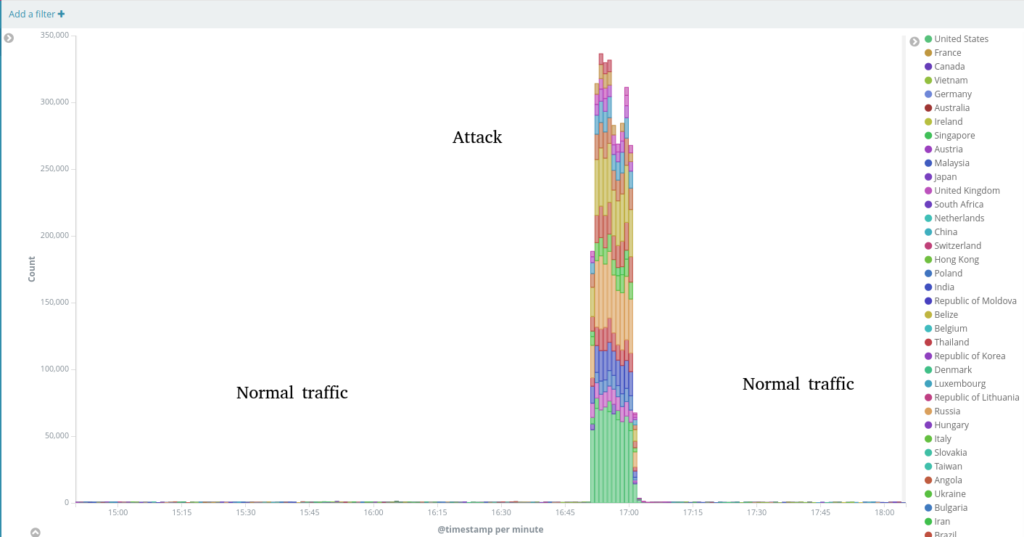

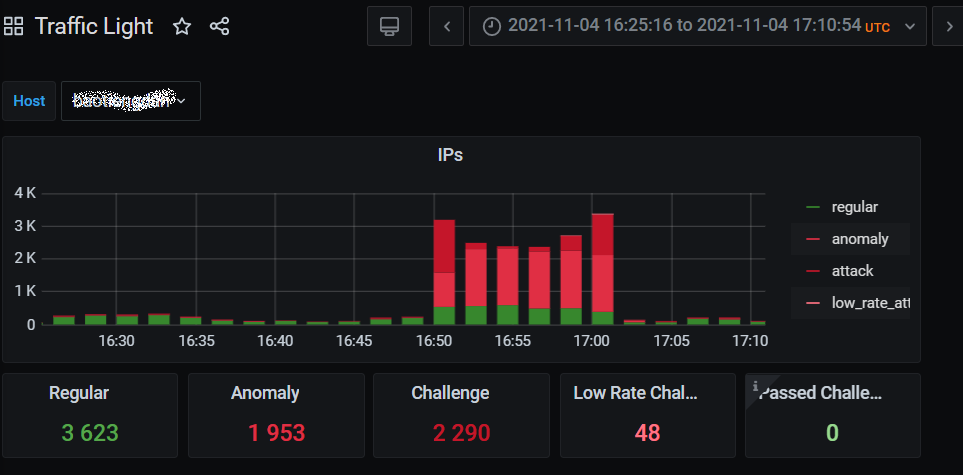

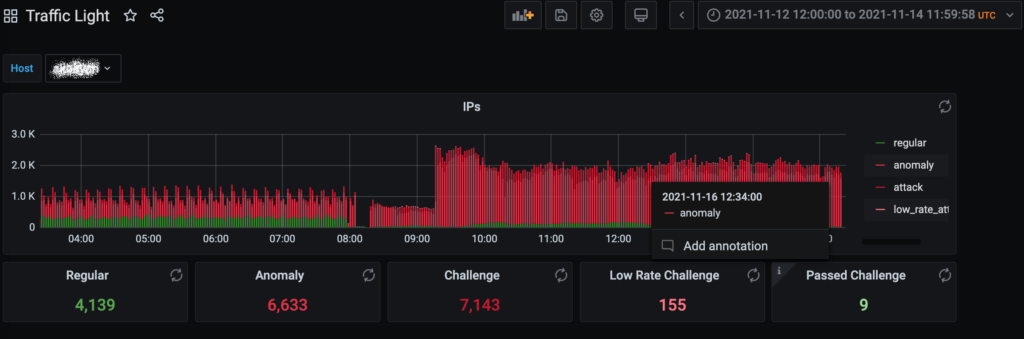

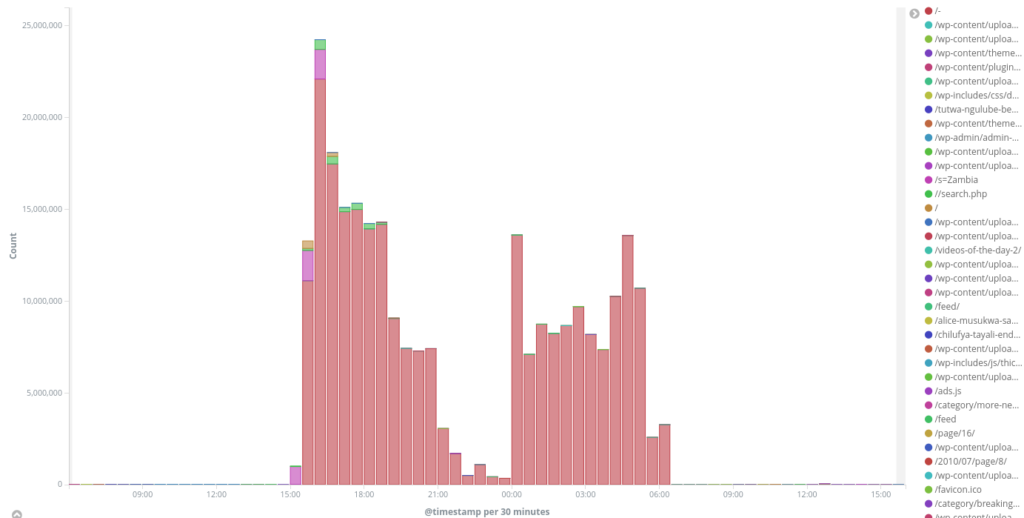

A DDoS (distributed denial-of-service) attack is like the early-pandemic grocery store rush, with customers piling up and blocking the door in a scramble for that last roll of toilet paper. Except that when all that traffic hits your site, the visitors are not customers; they are bots. Their main purpose is to overwhelm your site and knock it off the web. Without protection, your site can be incapacitated by an attack and shut down completely.

My site won’t get attacked because we’re too small

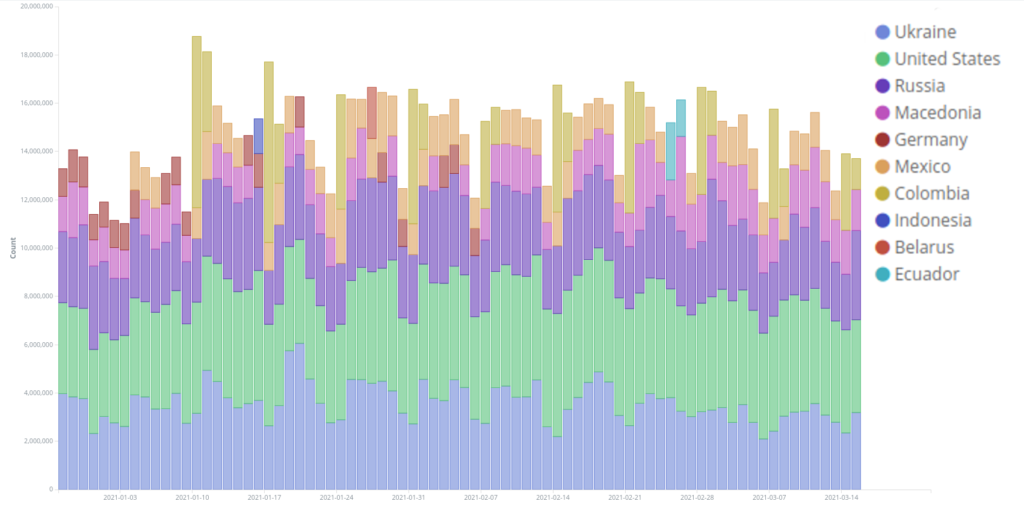

A DDoS attack can happen to anyone, no matter the size of your site or the number of visitors. In fact, many small sites, especially independent media and grassroots organizations, are particularly vulnerable to attacks because their voices often oppose a powerful government, a military, or a popular consensus. In some cases, these sites are targeted by hate groups, as was the case when we protected Black Lives Matter from attacks that hit their site more than 100 times a day for seven months in 2016.

One good question to ask: would anyone like to silence your voice? If the answer is yes, you likely already know the importance of DDoS protection. We provide the same level of protection for non-profits and independent media sites as we do for commercial clients. Learn more about our free protection for eligible groups here.

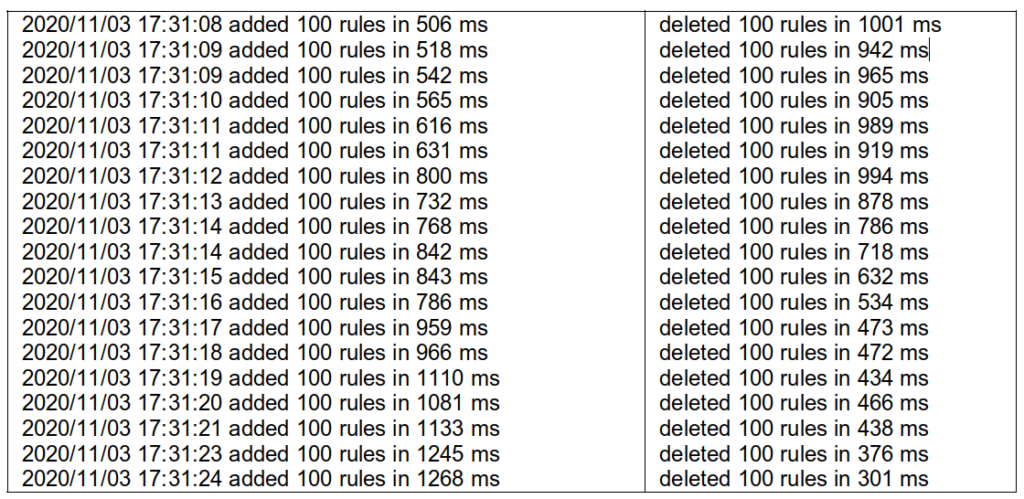

There aren’t many DDoS attacks, so I’m not likely to need protection

According to a recent white paper released by Cisco, DDoS attacks have been getting larger and more frequent each year. There were 7.9 million DDoS attacks in 2018, and Cisco estimates that the number will roughly double to 15.4 million by 2023.
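To put those two figures in perspective, here is a rough back-of-the-envelope calculation of the overall and yearly growth they imply. It uses only the 2018 and 2023 numbers quoted above; the variable names are ours, and a smooth compound growth rate is an assumption made for illustration.

```python
# Figures quoted from the white paper cited above.
attacks_2018 = 7.9e6
attacks_2023 = 15.4e6
years = 2023 - 2018

# Overall growth between the two years.
growth_factor = attacks_2023 / attacks_2018

# Implied compound annual growth rate, assuming steady year-over-year growth.
cagr = growth_factor ** (1 / years) - 1

print(f"{growth_factor:.2f}x overall, about {cagr:.1%} per year")
```

In other words, "doubling" over five years corresponds to attack counts growing by roughly a seventh every year.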

Aren’t bigger names better when it comes to DDoS protection?

No. Deflect has the capacity to handle protection of any site, and our experience mitigating attacks on some of the most vulnerable sites in countries all around the world has made us experts in the field.

According to Ali Reza, “IPOS directly benefited from Deflect’s expertise and professionalism when our main website was subject to an unprecedented attack. At the time the services of similar companies including CloudFlare and Google PageSpeed failed to protect IPOS’ election tracking poll against a major DDOS attack during the 2013 presidential elections in Iran. However, Deflect were able to quickly set up a CDN front and accept traffic from IPOS’ main domain and fight back against the attack.”

My industry won’t be attacked. It’s banks and governments that are most often subjected to DDoS attacks

While banks and governments have indeed been subjected to DDoS attacks, no industry is DDoS-proof. According to the 2019 Global DDoS Threat Landscape report by Imperva, attacks have occurred in most markets, including adult entertainment, gaming, news, society, lifestyle, retail, travel, and gambling. Even if your site is not in one of those markets, that does not mean you are safe from a DDoS attack.

Motivations for DDoS attacks

As the same report points out, the motivations for DDoS attacks are many, and may include:

Business competition – a competitor might hire a botnet to bring down your site.

Extortion – ecommerce sites depend on uptime to generate revenue. This makes them particularly susceptible to extortion, where attackers demand payment in exchange for a promise not to attack the site.

Hacktivism – political, media, or corporate websites can be targeted by hacktivists to protest against their actions.

Vandalism – disgruntled users or random offenders often attack gaming services or other high profile clients.

To this list, we would add:

Censorship – these attacks could be committed by individuals, governments, or militaries against groups for their social, environmental, human rights, or political movements with the goal of silencing their voices. As you can imagine, outside of North America, some of the most consistent attacks against the most vulnerable peoples and groups are of this type, including those against our client ARNO in Myanmar.

Transparent, Trusted, Ethical Protection

But I’m already protected by one of the more popular guys for “free.”

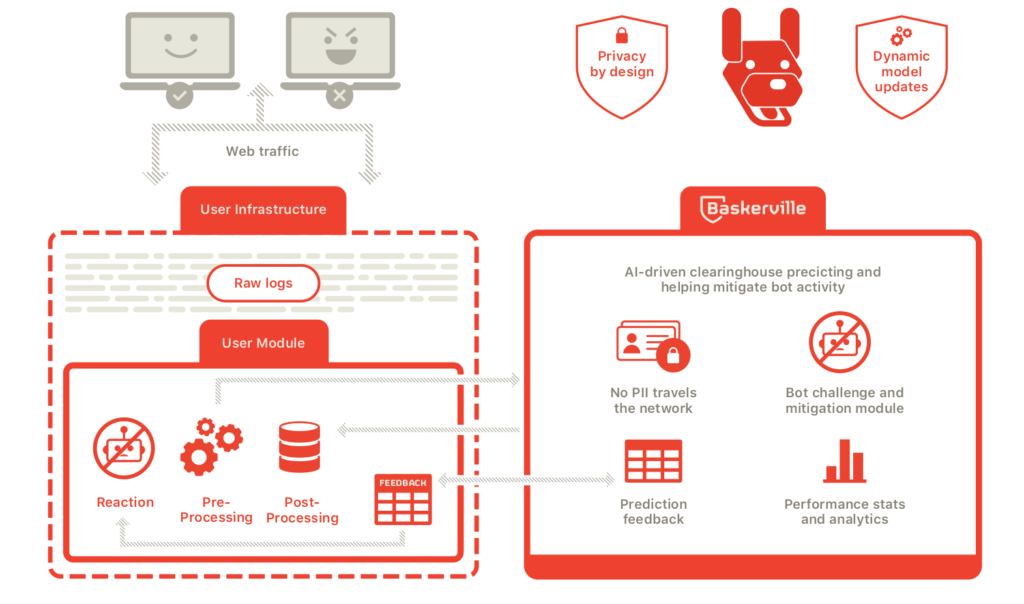

Large providers often claim to offer DDoS protection for “free.” To provide that service, however, many enter into agreements with venture capitalists, and the trade-off for their “free” protection is the privacy of your data, which can be shared or sold.

Before the Cambridge Analytica scandal, many of us would mindlessly scroll down and agree to all terms and conditions, but for independent media, nonprofit and community organizations, and companies, data should always be kept safe and private. When choosing who will protect you from DDoS attacks, read policies carefully to find out if you’re giving up anything for “free” protection. Our protection for non-profits, NGOs, and independent media really is free.

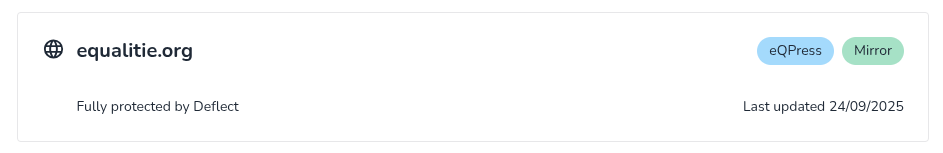

Deflect Pricing

At Deflect, we have always provided our services for free to eligible non-profits and independent media groups, without compromising your data privacy. Our principles, privacy policy, and conditions are transparent. For commercial sites, our pricing is transparent too. Unlike most of our competitors, we charge by the number of unique IPs that visit your site each month, not for multiple visits from one IP or for traffic from attacks.
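As a rough illustration of what counting unique monthly IPs means in practice, here is a minimal sketch. It is not Deflect's actual billing code: the function name, the `(date, ip, is_attack)` record format, and the `is_attack` flag are all hypothetical, chosen only to show that repeat visits from one IP count once and that attack traffic is left out.

```python
from collections import defaultdict


def unique_monthly_ips(requests):
    """Count distinct non-attack IPs per calendar month.

    `requests` is an iterable of (iso_date, ip, is_attack) tuples,
    a hypothetical record format used only for this example.
    """
    seen = defaultdict(set)
    for iso_date, ip, is_attack in requests:
        if is_attack:
            continue  # traffic from attacks is not counted
        month = iso_date[:7]  # "YYYY-MM"
        seen[month].add(ip)   # a set ignores repeat visits from one IP
    return {month: len(ips) for month, ips in seen.items()}


# Example data using reserved documentation IP ranges.
sample = [
    ("2020-05-01", "203.0.113.5", False),
    ("2020-05-02", "203.0.113.5", False),   # repeat visit, same IP
    ("2020-05-02", "198.51.100.7", False),
    ("2020-05-03", "192.0.2.99", True),     # flagged as attack traffic
    ("2020-06-01", "203.0.113.5", False),
]
counts = unique_monthly_ips(sample)
print(counts)  # {'2020-05': 2, '2020-06': 1}
```

Under this model, a site that is hit by a large attack in May would still be counted as having two unique IPs for that month.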

There are other limits to the “free” protection provided by some of our competitors. On more than one occasion, clients who were protected by our competitors have come to us after being attacked and then being told that they needed to either upgrade to a premium service or leave, just at the moment when they were most vulnerable.

We at Deflect consider ourselves to be the #1 ethical cybersecurity protection company in the world. We have over 10 years of experience protecting the most vulnerable and most attacked non-profit and independent media voices in over 80 countries across the world.

In addition to our commitment to transparent policies and privacy, we have a clear no-hate, no-incitement-to-violence policy. For us, this is a no-brainer. If your site breaks this policy, you will be asked to leave.

We are socially responsible. For every paying commercial client we protect, we are able to extend the same protections for free to important groups that otherwise could not afford protection, or may get kicked off the “free” protection of our competitors because the work they do makes them more vulnerable to attacks.

If you have more questions, or you’d like more information about Deflect’s non-profit, business, or partner programs, you can reach out to us by sending us a message here or by emailing terry@deflect.network for non-profit questions and garfield@deflect.network for business and partner programs.